A brand new public resource, powered by Synthesia, aims to educate the wider public on the rapidly rising phenomenon of AI-generated media, drawing back the curtain on the world of deepfakes.

Seeing is believing… or is it? People have been manipulating content for hundreds of years, but recent advances in AI have taken it to a whole new level of verisimilitude. Deepfake technology can transform lives: quadriplegics regaining the ability to watch themselves dance, or personalised kids' stories in their own language representing their communities. But there's great potential for harm too: weaponised media dropping days before an election, fraud and identity theft causing heartache and empty bank accounts, and trending non-consensual pornographic clips becoming a life sentence for young people, especially women. What happens when we can't tell facts from fake news? Outright bans on deepfakes have been suggested and tested, but a blanket approach to AI won't work, and we can't run from the tidal wave of new technologies already splashing our shores. Deepfakes are a problem that everyone needs to engage with as the information apocalypse, the infocalypse, approaches.

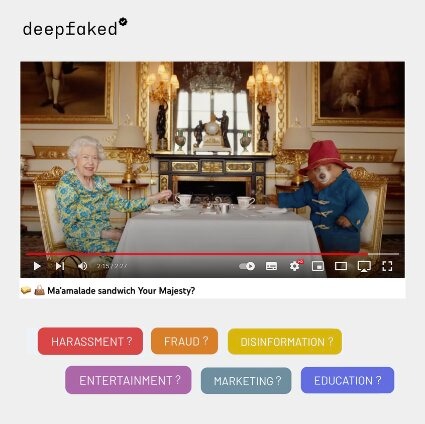

Synthesia, a company that creates synthetic videos from plain text in minutes (in more than 65 languages), unveiled a free educational platform to help people prebunk deepfakes, that is, preempt lies and fake news by debunking them in advance. Deepfaked.Video is an evolving public resource that seeks to catalogue and contextualise deepfake videos online, including videos that have been misidentified as deepfakes, to help raise awareness. It launches with more than 130 case studies, each exploring intent and genre to understand the purpose behind the video. Was it made to educate, to entertain or for marketing, or was the intent disinformation, harassment or fraud? Each case lists its genre (news, art, politics, satire, business, resurrection or pornography) alongside expert commentary, to help arm regulators, journalists and other interested parties with the tools they need to identify what is and isn't harmful AI-generated content. The platform is supported by a coalition of practitioners dedicated to debunking the myths behind deepfakes.

A leading voice for 'AI for good' is Victor Riparbelli, CEO of Synthesia. He believes tech companies shouldn't play down the risks of new technologies and must take responsibility for getting the wider public, including lawmakers and journalists, up to speed. "Synthetic media is shrouded in myths, making it difficult for regulators to take appropriate steps. Today, the public narrative is focused on 'deepfakes'; a term generally used to describe nefarious use. But to regulate effectively, we need to make sure people have the full picture. Education is the first step," Riparbelli said. "Only when AI radiates out to people everywhere will it get truly transformative. We can't just have a few people knowing about deepfakes. It's not until billions of people are informed on the good and the bad that deepfakes have to offer, will we be able to prevent further disinformation across the globe and provide a safer online space for the next generation."

Google search data, as well as the website's own data, highlight not only a global interest in and rise of AI technology but also, with it, the need for tighter regulation as disinformation continues to increase. Since 2018, searches for terms related to 'deepfakes' have increased by 201,124% and searches for 'making deepfakes' have spiked by 161,627%. Meanwhile, growth in the AI-generated media space for artistic apps such as Wombo and Reface has skyrocketed by 35,502,326% across the globe.

Contrary to the Silicon Valley mainstream, Riparbelli is pro-regulation and feels there are obvious gaps in today's laws that allow perpetrators to go unpunished. He is passionate about ensuring a safer online space, especially for the next generation. "Once you look behind the headlines, it's obvious that bad intent is the common denominator. We should regulate based on the intent, not the technology," Riparbelli said. "These are not new issues and the legal framework exists, but we need stricter laws to punish malicious intent. People must be punished if they create or share non-consensual porn, bully or harass someone, discriminate against minorities, defraud others or cause disinformation amongst democracy."

Deepfake expert Nina Schick describes the technology as one of the most important revolutions in human communication, but says it's a cat-and-mouse game: people will continue to create malicious deepfakes whilst professionals attempt to combat them with new technologies. Schick believes prebunking is key. "We don't ban email just because some use it with bad intent. Instead, we educated the public and applied technology solutions to limit bad faith use," Schick said. "Governments should focus on using this as an educational tool. Take the Zelensky deepfake for example – thanks to proactively preempting, lies were quickly debunked and disinformation was dispelled by warning people about it before they see it."

Deepfaked.Video is backed by an interdisciplinary team of researchers, professors and creative thinkers. The coalition of practitioners involved includes Victor Riparbelli, Synthesia CEO; Nina Schick, deepfakes expert and author; Siwei Lyu, Professor of Computer Science at the University at Buffalo; Luisa Verdoliva, Professor at the University of Naples and visiting scientist at Google AI; and Henry Ajder, a leading expert on synthetic media.

As AI technology develops and synthetic media, or deepfakes, become more sophisticated, they are also becoming much easier to make and more popular. It's safe to say seeing isn't believing anymore. AI will amplify the issues we already have with spam, disinformation, fraud and harassment, so reducing this harm is crucial. Disinformation is a complex problem with no simple technological solution; the first step is to better understand the issues at hand. Deepfaked.Video offers tools and techniques to help people prebunk and debunk deepfakes as the phenomenon continues to rise. Members of the public can submit links to deepfakes, which experts will then analyse and upload as new cases to help stop the spread of fake news.